D3 Embedded – “Ewaste-Net”: Everything It Touches Turns Into Gold

Authors

- Robert Ke

- Shengjie(Jason) Wang

- Rong Gu

- Yujun Sun

- Yuyang Wang

Sponsor

D3 Embedded

Instructors

Professor Ajay Anand

Professor Cantay Caliskan

Abstract

Our project tackles the growing mountain of e-waste by turning simple photos of devices into CSV data. We combined three image collections (Ambient-125, Ewaste-70, and TextOBB-1681) and trained a You Only Look Once (YOLO) model to locate every label on a device. After cropping those regions, the lightweight Qwen optical character recognition (OCR) model transcribed serial numbers and model names. Line-level crops ran more than twice as fast as block-level crops and brought the average character error rate (CER) to roughly 1 (0.9890 in our benchmark), which we consider a reasonable result. The detector achieved an F1 score of 0.89, and the full pipeline kept the average word error rate (WER) below 1.1 while producing clean text for more than 1,600 images. In the future, a large language model could convert the raw strings into structured fields (brand, model, production date) and estimate each item’s resale or recycling value. The result is an end-to-end “Ewaste-Net” engine that can help recyclers quickly sort and monetize e-waste at scale.
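As a rough illustration of the "photos to CSV" output, the sketch below serializes OCR results with Python's standard `csv` module. The column names (`image`, `crop_id`, `text`), the function name `ocr_results_to_csv`, and the sample records are all hypothetical; the project's actual schema is not specified here.

```python
import csv
import io


def ocr_results_to_csv(records):
    """Serialize OCR records (a list of dicts) to CSV text with a fixed column order."""
    fieldnames = ["image", "crop_id", "text"]  # hypothetical schema
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    for record in records:
        # Missing keys become empty cells instead of raising an error.
        writer.writerow({name: record.get(name, "") for name in fieldnames})
    return buffer.getvalue()


# Hypothetical records; in a real pipeline these would come from the OCR stage.
sample = [
    {"image": "device_001.jpg", "crop_id": 0, "text": "Model: A1234"},
    {"image": "device_001.jpg", "crop_id": 1, "text": "S/N 5CD1234XYZ, Rev B"},
]
print(ocr_results_to_csv(sample))
```

The `csv` module quotes any field that contains a comma, so label text such as "S/N 5CD1234XYZ, Rev B" stays in a single cell.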

Introduction

In today’s fast-paced world, companies like Apple release new phones every year, prompting many consumers to replace their devices long before they reach the end of their usable life. This cycle generates an enormous amount of electronic waste (e-waste), creating significant environmental challenges.

Our project addresses this problem by using YOLO and large language models to develop and train two specialized models that together extract textual information from e-waste items. Retrieving this text lays the foundation for future work: analyzing and classifying e-waste based on the extracted information, and determining whether and how products can be recycled.

This technology has the potential to greatly reduce human labor in the e-waste recycling process, and our project forms a critical first step toward building a smarter, more efficient recycling solution.

Method and Workflow:

Results:

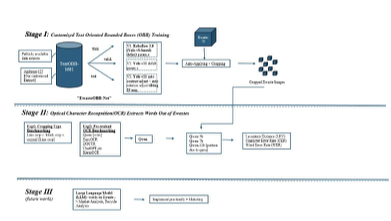

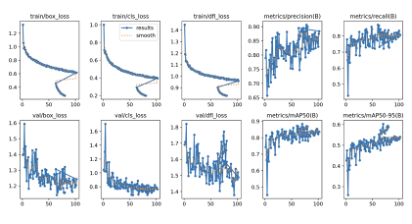

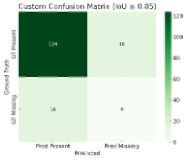

Our pipeline was built in three key stages using YOLOv8, Qwen OCR models, and large language models (LLMs). Each stage was validated on diverse, representative datasets: Ambient-125, Ewaste-70, and the larger TextOBB-1681 collection, which aggregates public images, in-house captures, and D3 Engineering contributions. This dataset base helped ensure the reliability, generalizability, and scalability of Ewaste-Net across varied electronic product types and conditions.

In Stage I, object detection models were trained to locate and crop text-bearing regions in images of e-waste components. Using the YOLOv8 framework and the Roboflow API, we iteratively trained four versions of the detector. Version 3, which used data augmentation such as automatic tilting (±25 degrees), performed particularly well on Google Colab’s T4 GPU, achieving an F1 score of 0.8857. This detection capability is crucial for accurately isolating serial numbers, brand names, and model information, which often appear in obscure or distorted form on used electronics.

In Stage II, we integrated the Qwen OCR model to read the text in the cropped regions. We conducted two key experiments here. The first compared line-based cropping with block-based cropping for OCR accuracy and efficiency: with line crops, Qwen-3B was 2.4 times faster per character, and the larger Qwen-32B showed an even greater speed advantage at 6.7 times faster. In our benchmark evaluations, we measured an average Character Error Rate (CER) of 0.9890 and an average Word Error Rate (WER) of 1.0538, which we take as confirming Qwen’s reliability in extracting high-quality, structured text from raw e-waste images.

The broader significance of Ewaste-Net lies in its potential to change how electronic waste is handled, recycled, and resold globally. Rather than relying on manual inspection of labels or barcodes, which are often degraded by age or wear, our pipeline automates and streamlines the entire process.
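For readers unfamiliar with the metrics quoted above, the following is a minimal sketch of how F1, CER, and WER are commonly computed. It uses the standard definitions (harmonic mean of precision and recall; Levenshtein edit distance divided by reference length) and is not the project's evaluation code. The function names and sample strings are hypothetical, and the sketch returns fractions, whereas the text above does not state whether its CER and WER are fractions or percentages.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or lists of words)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,           # deletion
                            curr[j - 1] + 1,       # insertion
                            prev[j - 1] + cost))   # substitution or match
        prev = curr
    return prev[-1]


def cer(reference, hypothesis):
    """Character error rate: character edits divided by reference length."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return edit_distance(reference, hypothesis) / len(reference)


def wer(reference, hypothesis):
    """Word error rate: word edits divided by the number of reference words."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical label text: one wrong character out of seven.
print(cer("SN12345", "SN12845"))
# One substitution plus two inserted words over two reference words = 1.5.
print(wer("MODEL A1234", "MODEL A1284 REV B"))
```

Because the denominator is the reference length, both rates can exceed 1 when the OCR output contains more text than the reference, as in the second example.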

References:

[1] Roboflow. (n.d.). Roboflow Dataset Platform. Retrieved April 19, 2025, from https://roboflow.com

[2] Jocher, G., et al. (2023). YOLOv8: Ultralytics Real-Time Object Detection. Ultralytics. Retrieved from https://github.com/ultralytics/ultralytics

[3] Roboflow Inc. (2023). Roboflow 3.0: End-to-End Computer Vision Pipeline. Retrieved April 19, 2025, from https://roboflow.com

[4] United Nations Institute for Training and Research (UNITAR). (2024, March 20). Global E-waste Monitor 2024: Electronic Waste Rising Five Times Faster than Documented E-waste Recycling. Retrieved April 19, 2025