Project Description

Imagine you are lying down and want to see the outside world, but are too lazy to go outside. Why not take a virtual trip through the eyes of a robot that captures video with a camera and displays it in your virtual reality headset? We built this using a Raspberry Pi 4 with a Raspberry Pi Camera Module attached to a pan-tilt mechanism. The pan-tilt mechanism mimics the user’s head movements, which changes where the camera is facing. The camera footage is streamed using Flask and can be accessed through a URL from any device; the device we chose is a Samsung Galaxy S10+. The stream is incorporated into our mobile app, which uses the phone’s accelerometer and gyroscope to track your head’s movement. Finally, the camera module and pan-tilt mechanism are attached to an RC car to give the impression that you are moving and looking around while you are actually in the comfort of your laziness.
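The head-tracking step described above comes down to adding each change in head orientation to the current servo angle. A minimal sketch of that accumulation follows. It assumes the servos accept 0 to 180 degrees, which is typical for hobby servos but not confirmed for this build. `update_angle`, `SERVO_MIN`, and `SERVO_MAX` are hypothetical names used only for illustration.

```python
# Hypothetical helper for illustration; not part of the project's code.
SERVO_MIN = 0.0    # assumed lower servo limit, in degrees
SERVO_MAX = 180.0  # assumed upper servo limit, in degrees


def update_angle(current: float, delta: float) -> float:
    """Add a head-movement delta to the current servo angle, clamped to the servo range."""
    return max(SERVO_MIN, min(SERVO_MAX, current + delta))


if __name__ == "__main__":
    angle = 90.0                      # start with the camera centered
    for delta in (30.0, 45.0, 45.0):  # the user keeps turning the same way
        angle = update_angle(angle, delta)
    print(angle)  # 180.0: the last delta is clipped at the upper limit
```

Clamping keeps the commanded angle inside the range the servo can physically reach, so a long head turn cannot push the accumulated value past the mechanical stop.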

Hardware

- Raspberry Pi 4

- Pan Tilt Mechanism

- Raspberry Pi Camera Module

- Breadboard

- Servo Motor

- RC Car

- DESTEK 2021 V5 VR Headset

- Wires, battery pack

- Wi-Fi module built into the Raspberry Pi

- Samsung Galaxy S10+ using its accelerometer and gyroscope capabilities

Software

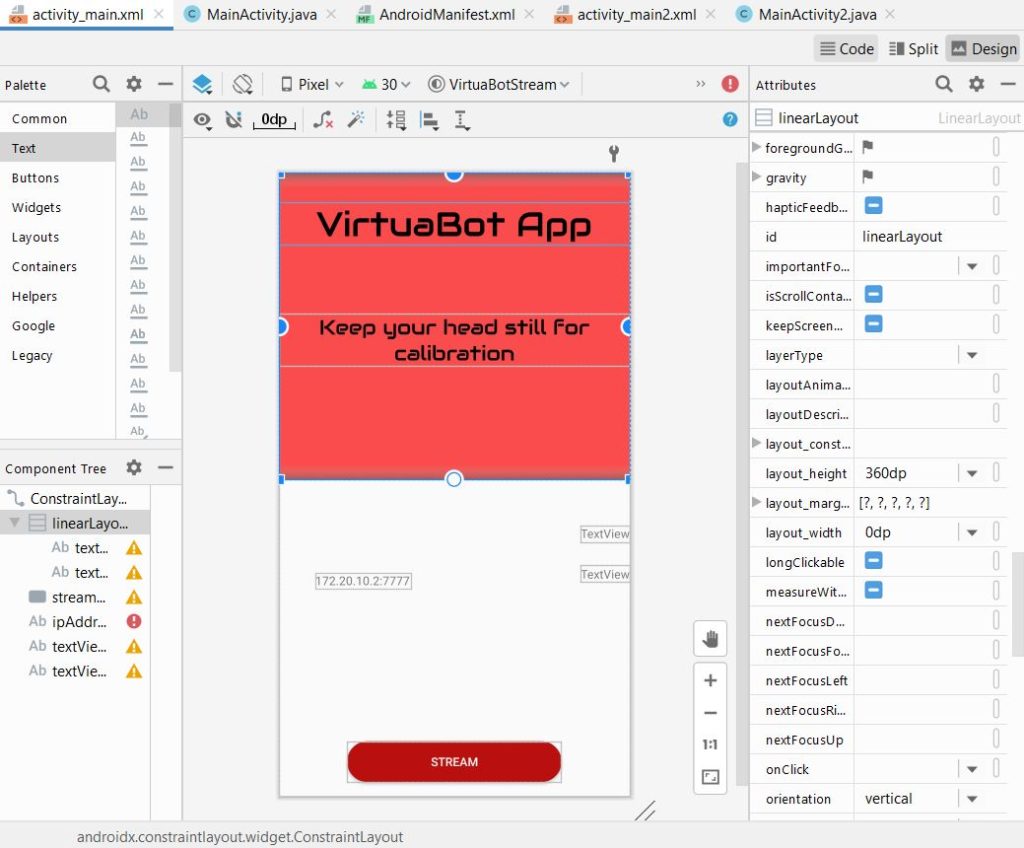

Section of Mobile App UI

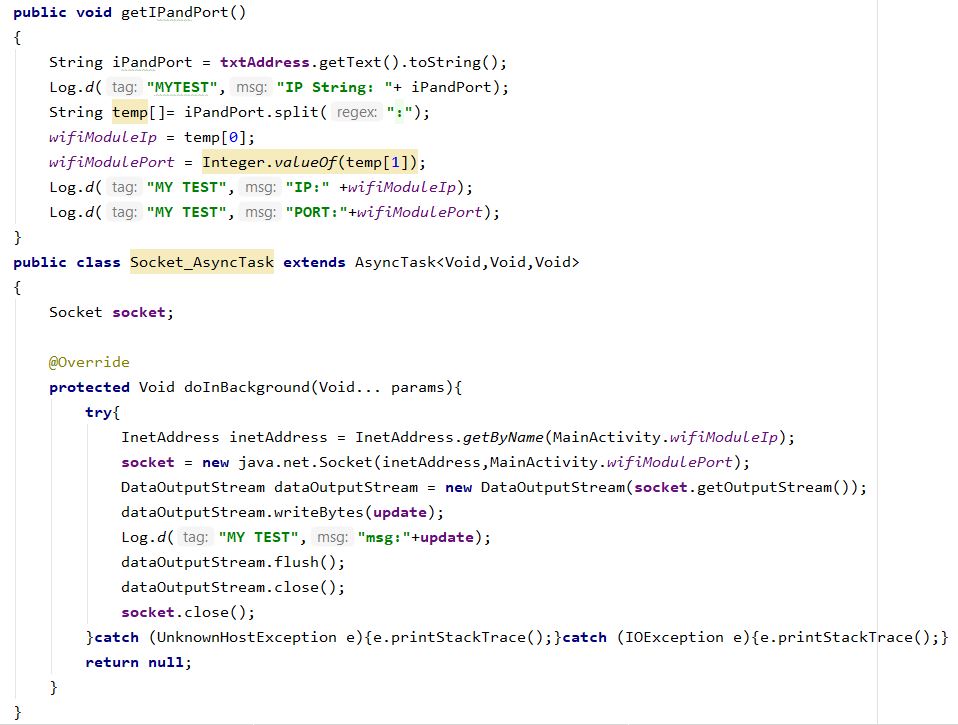

Section of Mobile App Backend
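The app backend is only shown as an image, so here is a rough Python stand-in for what a client of the Pi-side server might send. It assumes each update is a UTF-8 string of two numbers separated by `:`, with the tilt change first and the pan change second, sent to TCP port 7777. The function names are hypothetical, and the real app may format its messages differently.

```python
import socket
from typing import Tuple

PI_PORT = 7777  # port the Pi-side TCP server listens on


def encode_deltas(tilt_delta: float, pan_delta: float) -> bytes:
    """Format one head-movement update as b'tilt:pan' (assumed field order)."""
    return f"{tilt_delta:.2f}:{pan_delta:.2f}".encode("utf-8")


def decode_deltas(message: bytes) -> Tuple[float, float]:
    """Inverse of encode_deltas, mirroring the split-on-colon parsing on the Pi."""
    tilt_text, pan_text = message.decode("utf-8").split(":")
    return float(tilt_text), float(pan_text)


def send_deltas(host: str, tilt_delta: float, pan_delta: float) -> None:
    """Open a TCP connection to the Pi and send a single update."""
    with socket.create_connection((host, PI_PORT), timeout=2.0) as sock:
        sock.sendall(encode_deltas(tilt_delta, pan_delta))
```

TCP is a byte stream without message boundaries, so updates sent back to back on one connection can arrive merged in a single `recv` call. A delimiter such as a newline would be needed to separate them reliably.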

Final Virtua Bot RPI Code

    # Final Virtua Bot RPI Code
    from socket import socket, AF_INET, SOCK_STREAM
    from threading import Thread

    import RPi.GPIO as GPIO
    from flask import Flask, render_template, Response

    import pan_tilt
    from camera import VideoCamera


    def rpi_server():
        """TCP server that receives head-movement deltas and drives the servos."""
        pan_tilt.initialize()
        pan = 27   # GPIO pin used for horizontal rotation
        tilt = 17  # GPIO pin used for vertical rotation

        HOST = ''  # listen on all interfaces
        PORT = 7777
        BUFSIZE = 1024
        ADDR = (HOST, PORT)

        tcpSerSock = socket(AF_INET, SOCK_STREAM)
        tcpSerSock.bind(ADDR)
        tcpSerSock.listen(5)

        # Start with both servos centered.
        angle_tilt = 90.0
        angle_pan = 90.0

        try:
            while True:
                print('Waiting for connection')
                tcpCliSock, addr = tcpSerSock.accept()
                print('...connected from :', addr)
                while True:
                    data = tcpCliSock.recv(BUFSIZE)
                    if not data:
                        break  # client disconnected; wait for the next one
                    # Message is assumed to be "tilt_delta:pan_delta" in degrees.
                    data = data.decode('utf-8').split(":")
                    angle_tilt += float(data[0])
                    pan_tilt.setServoAngle(tilt, angle_tilt)
                    angle_pan += float(data[1])  # second field drives the pan servo
                    pan_tilt.setServoAngle(pan, angle_pan)
                tcpCliSock.close()
        except KeyboardInterrupt:
            pass
        finally:
            # Release the servos, GPIO pins, and listening socket on exit.
            pan_tilt.close()
            GPIO.cleanup()
            tcpSerSock.close()


    pi_camera = VideoCamera(flip=False)  # flip pi camera if upside down.

    # App Globals (do not edit)
    app = Flask(__name__)


    @app.route('/')
    def index():
        return render_template('index.html')  # you can customize index.html here


    def gen(camera):
        # Yield camera frames forever as parts of a multipart MJPEG stream.
        while True:
            frame = camera.get_frame()
            yield (b'--frame\r\n'
                   b'Content-Type: image/jpeg\r\n\r\n' + frame + b'\r\n\r\n')


    @app.route('/video_feed')
    def video_feed():
        return Response(gen(pi_camera),
                        mimetype='multipart/x-mixed-replace; boundary=frame')


    def run():
        app.run(host='0.0.0.0', debug=False)


    if __name__ == '__main__':
        # Run the servo control server and the video stream side by side.
        Thread(target=rpi_server).start()
        Thread(target=run).start()

Live Demo and Images

Takeaways

As our knowledge grows and our ability to design complex systems and devices advances, we have come to realize that user experience should always be at the forefront of the agenda. When developing this device, we had high ambitions that tested the limits of our CPU and led to some runtime lag. A large amount of time was spent rectifying this, and in the process we learned several efficiency techniques (some of which we weren’t able to implement) that we can carry into our profession.

Team Members

- Jewel Holt

- Ronaldo Naveo

Supervisors

- Jack Mottley

- Daniel Phinney